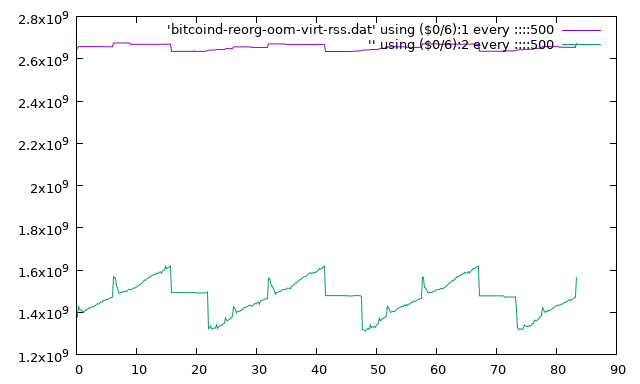

To whet the appetite, have a look with me at this plot (click through for the underlying data):

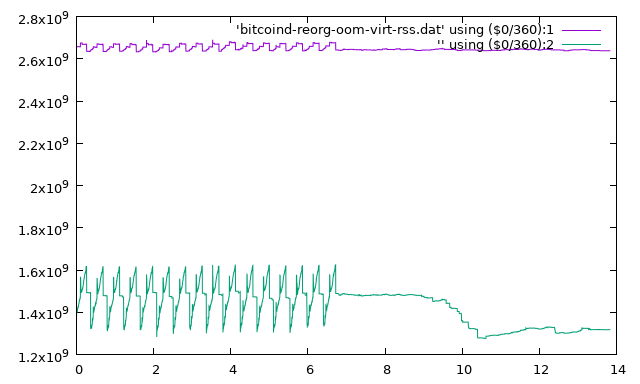

What is this, some exercise in audio signal processing featuring the tail end of a glitchy sawtooth wave? The pulse monitor of a patient who didn't make it? Why no; it's the peculiar memory usage pattern of a particular Bitcoin node, both by the broadest measure of total virtual address space mappings ("VIRT") and the narrower resident set size ("RSS"), sampled roughly every 10 seconds over a 14-hour interval.(i) Let's zoom it in to just the first 500 samples or 83 minutes:

So what's going on here? The patient had reported to me with the usual symptom of being stuck, well behind the active blockchain and unable to make progress accepting any new blocks, and thus reduced to a museum piece, frozen in time and unable to participate meaningfully in future activity. The debug log showed it was looping on a failed reorganize attempt. Finding the initial cause was easy: a consistent I/O error when reading a particular block from the filesystem. This was reproducible by a simple cat >/dev/null of the relevant .blk file, indicating an uncorrectable error reported by the storage medium, a Samsung SSD. The recommendation was thus to restore from the last backup. That wasn't even too time-consuming since the affected file happened to be a long completed block collection file,(ii) then the node passed a full readback test using -checkblocks=0 and proceeded with its reorganization.

But then things got interesting. The reorganization ran, and ran... and ran... and failed, with an

************************

EXCEPTION: 11DbException

Db::get: Out of memory

bitcoin in ProcessMessages()

after which it would go right back and try again, triggered by receipt of a new catchup block from the network. It didn't look like the machine was that low on memory, but I had the parts on hand to upgrade the Thinkpad from 4 to 8 GB, so I lent them to the cause; still no dice. So we started collecting the memory log, on the idea that we simply weren't checking at the right time. The steady increase, particularly in RSS, through the course of a cycle certainly pointed to some runaway memory usage, yet at no point did it come anywhere close to the available capacity of the machine. Another question was why each cycle took so long; the debug log offered no clues whatsoever to the shape or progress of the lengthy reorg process. So adding some new log messages was an obvious first step.

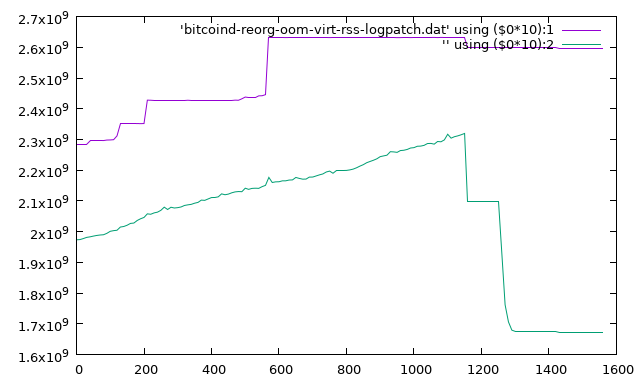

Unfortunately, on restarting with the new code, the node received no new relevant blocks from the network by which to re-trigger the reorganize, only unconnectable "bastard" blocks. Basically, due to the behavior of the broken block download process, the peer node will only announce some limited number of blocks ahead of the requesting node's current tip; if it "already has" them - notwithstanding that it failed to connect them for its own transient reasons - it concludes that there's nothing to do. This is also why the sawtooth pattern stops in the memory plot; it ran out of "free preview" blocks to look at. So we had to bypass the network protocol by looking up the height of the block hash that triggered the last reorg attempt, dumping the subsequent block from a healthier node, and feeding it into the afflicted node using eatblock. At least this made for a "cleaner" run, with a freshly started bitcoind process going through a single reorg attempt with no network connections:

The now much more helpful debug log revealed the shape of the fork causing the reorg: not actually a fork at all, with no blocks to disconnect and 501 to connect. It just happens that in this situation where the node previously stored but failed to fully connect a block (perhaps due to external error or interruption), the fact that the next block it sees doesn't directly connect to its tip means it can't do a simple ConnectBlock, so it dispatches to the more general Reorganize routine. This handles all the pending blocks in one shot - specifically, in one database transaction as well as one critical section (see also ye olde map). This certainly explained the long cycle duration. Further, there was an obvious memory drain in the form of temporary transaction lists - built up by Reorganize so as to later update the in-memory transaction pool if the reorg proves successful i.e. the proposed longer chain is valid - which could account for at least part of the near-linear ramps observed in RSS.

That all may suggest some possible improvements; but so far none of it is fatal and we seem no closer to finding the cause of the failure. Further, the situation made for a rare and valuable test of node resilience under deep blockchain fork conditions. Even if this particular case could be optimized to connect blocks incrementally and avoid the need to reorganize, the more general and quite important case cannot, as things currently stand. A node that fails to follow the longest valid proof-of-work chain, howsoever inconvenient or costly that may be, is not an honest Bitcoin node and does not implement the protocol, as widely understood and as required to maintain consistency, distributed consensus, a single fungible currency without trusted third parties and all that. In short, given enough time, Reorganize must either succeed or else prove the proposed chain invalid, without requiring significantly more working memory than is normally available to the node, no matter how many blocks there are to disconnect or reconnect.

The enhanced log output also revealed that the crash came after connecting 210 blocks of the 501 total, ruling out at least the fairly opaque step of the Berkeley DB transaction commit as the culprit, since the code never got that far.

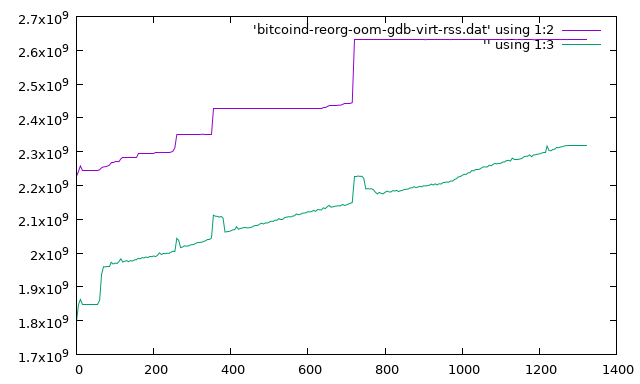

Could it be that we still weren't looking "fast enough", that there was some single ginormous allocation request or else a rapid succession of large ones just prior to raising the exception? Not knowing where exactly the exception was coming from was one obstacle, and adding an exception handler at a higher level wouldn't necessarily help, because the stack would already unwind with C++ destructors freeing everything up by the time control entered the handler. So I found the magic gdb syntax for breaking at the exact point an exception is raised/thrown. Documentation was sparse, and it was supposed to allow filtering for a specific type of exception but that didn't work. Fortunately there weren't any exceptions arising during normal operation of the code to get in the way, so it ran until hitting the out of memory (OOM) and yielded up a gdb prompt.

I poked around, gathering information about execution state at various levels, and saved the relevant log messages for good measure. The memory data proved that there was not in fact any such narrow spike just before death:

This left no choice but to dig into what I'd been rather hoping to avoid: Berkeley DB or the related code, following the clue that the error consistently originated there. This revealed the true problem surprisingly quickly. It had been hiding in plain sight, in BDB's own separate ~/.bitcoin/db.log : "Lock table is out of available object entries". That's a straight flashback to nine years ago, to what was arguably the single most impactful change to come out of "The Real Bitcoin" project: asciilifeform_maxint_locks_corrected.vpatch. So it's time to settle in for a little history lesson.

Some years prior, a cast of Github-based muppets (calling themselves "The Bitcoin Developers", or "The Bitcoin Core Developers" for long, but better known in civilized parts as the Power Rangers), as part of a steady stream of reckless changes in their fork of Satoshi's code, had swapped out databases, replacing (partially!) the relatively mature BDB with some fashion-of-the-day Google thing. They then pushed miners to raise their "soft" block size limit (i.e. what size of blocks to produce, as opposed to the "hard" limit of what size is accepted as valid from others), because The People needed it, you know. These new, bigger blocks had disastrous unforeseen consequences, causing nodes still running the BDB implementation to exhaust self-imposed limits on lock resources and fail to accept certain blocks, reportedly even in a non-deterministic way i.e. depending on the exact history of each node's database. This resulted in relatively long-lived forks in the network, and fed into the propaganda that you "had to upgrade" - to the version pushed by the very ones who broke it in the first place through their aggressive changes to things they didn't understand.(iii)

On the contrary, you could simply adjust the BDB configuration and set larger limits. But how large was "larger" ? Too high and it would suck up so much memory that it couldn't run at all; too low and it might appear to work today but fail again tomorrow. So the problem kept recurring, for TRB nodes along with any others running old code, up until Mircea Popescu condescended to share the magic numbers that he'd been using, reportedly based on the theoretical maximums of a 1MB block. The TRB project, carrying the baton of maintaining the unfucked codebase from before the database switch, took the numbers at face value, codified them and declared victory. And indeed, no single block has been seen to run afoul of the limits ever since, despite ample attempted spamming attacks against the network.

But now we see the gap. What is enough for a single block is not enough for a series of blocks processed in a single database transaction; as the docs note,

transactions accumulate locks over the transaction lifetime, and the lock objects required by a single transaction is the total lock objects required by all of the database operations in the transaction.

Since there is no limit on the number of blocks in a reorg and thus the number of database pages touched in the transaction, there can be no sufficient values for the limits on locks and lock objects.

At this point the question practically screams out loud: what possible need is there to be locking millions of distinct items, in an application which uses only a handful of threads, doing only a handful of distinct tasks, of which the heaviest are infrequent, and nearly everything is blocked out in a critical section anyway? Basically, BDB is trying to provide fine-grained locking, so that you know, there COULD be a high degree of concurrency, with multiple readers and even writers as long as they're touching different parts of the database. Alternatively, it provides the simpler, multiple reader/single writer model through the Concurrent Data Store "product"; but for whatever stupid reasons, this cannot be combined with the required transaction subsystem.

Practically speaking, this left us with two options. Either we stop using BDB's locking system altogether, or else we break the reorganize algorithm down to do a limited amount of work per transaction. There's a lot to be said for the second route: massive long-lived transactions are the enemy of concurrency and response time in a database; further, at present the process can't be interrupted during a lengthy reorg or else it "loses its place" and has to restart from the beginning. On a closer look though it gets thorny. If the reorg is split up, then external queries can actually see the blockchain rewinding into the past, "getting worse before it gets better", with consequences unclear. What's more, in the current implementation it's not possible to know up front whether the proposed chain is even valid and thus whether the reorg will succeed. If it fails, then all the externally-visible noise and reversed transactions were for naught and we have to explicitly retrace our steps, hopping back to where we started one block at a time. I had some thoughts on how one could resolve at least the uncertainty:

jfw: this could perhaps be assured by fully checking blocks prior to admitting them into the database, i.e. no more hopeful blocks

jfw: which might require changes to how transactions are indexed, at minimum indexing also the transactions from accepted but inactive blocks off the main chain, so that downstream blocks have what to reference

jfw: and "where a transaction is spent" would no longer be an atom but a list of potentially many downstream blocks(iv)

jfw: further there's the spamming issue where any number of transactions & blocks could be spewed downstream of the early chain where difficulty is low, and the node now has to index all those transactions rather than just storing the block data as it does already

jfw: this together with the unreliable block download does seem to point to headers-first sync, i.e. show me the full proof of work chain that puts your blocks in the lead before I'm interested in what they containjfw: with the contemplated change to transaction indexing, the reorg itself might get a lot faster, if all that's needed is updating the block index flags for which blocks are active

jfw: and mempool updates

Even if all the details can be dealt with acceptably, it's clear that this would be quite an involved and invasive change, requiring perhaps a rewrite of the full transaction database (confusingly stored in blkindex.dat) on first run to update the structure, breaking compatibility. Moreover, the codebase is still such a long way from clean and comprehensible that the costs of validating such a change are likely to be significant.

On the other hand, if BDB's locks can be disabled and suitably replaced without giving up transaction support, it would solve the immediate problem without making things any worse. It might further entrench the lack of concurrency in the threading code, but then, nothing stops us from turning them back on later if sufficient need arises to justify a more refined solution.

As it turns out, they can indeed be so disabled. The catch is that we then become responsible for ensuring that no thread can access the database while another is working with it. What exactly "working with it" means is not fully clear, so we can take a broad approach: any code that constructs a CDB object, or one its derived classes CTxDB, CAddrDB, or CWalletDB, must be mutually excluded from other threads doing the same for the full scope of that object. On exit from such scope the ~CDB destructor runs, calling CDB::Close and aborting any unfinished transaction. This suggests an automated solution, acquiring a global lock in the constructor and releasing it in the destructor. Unfortunately this would not be a "leaf" lock, that is, the calling code might attempt to acquire other locks while it is held, raising the possibility of deadlock (suppose thread A holds cs_main then attempts to construct a database handle, while thread B has a database handle then attempts to acquire cs_main; neither can progress because each waits for the other). So it doesn't spare us the need to look at how the locks are actually used. Further, we also need to consider direct calls to dbenv, the global database environment handle.

The good news, such as it is, is that mutex locks (in the form of "critical sections") are used so coarsely throughout the bitcoind code that we're most of the way there already. First, observe that all calls to ProcessMessage, ProcessBlock, SendMessages, and RPC commands are fully guarded by cs_main. Then, anything done in the body of AppInit2 (prior to its final CreateThread calls) is running at startup in the main thread before there are any other threads. Intuitively, this covers almost everything because what else is there to cause the daemon to wake up and do anything at all? But intuition alone and "almosts" aren't going to cut it here.

Stay tuned for the deep dive!

- I really just meant "let it run for at least one or two full cycles", not "let it run overnight" but I guess I didn't make that fully clear or else attention turned to other things, and so we get the whole picture. [^]

- The blk####.dat files don't change, only grow by appending until full; blkindex.dat is another story entirely. [^]

- I must confess I was among those hoodwinked at the time, not having realized that the project had been hijacked and figuring it was simply a bug in the old code. [^]

- In this expanded index structure, to "pre-check" a candidate block off the main chain, it's more complex to check whether a previous output is spent: you have to check each block in its "spent-by" list to see if that block is on the branch being extended. The naive way to do that is to walk the block index back from the branch tip in question; but since this is required for potentially multiple referenced blocks for each input of each transaction in the candidate block, such a linear algorithm on the full blockchain height is prohibitive. However, it can be optimized by precomputation: walk that block index branch once up front for the block, setting a temporary flag on each visited node, similar to the current "is on the active chain" status. [^]

This is quite the write up, well done and looking forward to the deep dive, my appetite is well whet.

To add context in case someone else in the readership thinks they've run into similar data corruption, this was first uncovered by running all the blk0***.dat files on the node's backup's disks through sha1sum and then diffing them.

This gets overlooked by the Power Rangers and their muggle followers to such an alarming degree your concise, clear magic alien dragon being of pure energy description is quite refreshing.

Good point.

Comment by Robinson Dorion — 2024-03-18 @ 02:26

Ah right, possibly the "cat >/dev/null" is a mis-reconstructed memory and it was just checksumming the local files that showed the read error; pretty much the same thing. I'd quite expect the errors in debug.log failed to mention the file causing them.

You're referring to the belief that "no one would dare to unwind our soft forks, too many butts would be hurt and ours especially so therefore it can't happen"?

Then again, it's not even just the power rangers (to the extent anyone cares to distinguish, as if a spamming attack on bitcoin codebase maintenance is somehow totally different from a denial of service attack on same); let us not forget:

Comment by Jacob Welsh — 2024-03-18 @ 20:24

We did that too, but it was just confirmation after the checksumming failed.

Myeah. It certainly didn't stand out from all the noise otherwise in there. If you have something in mind to grep, I still have a copy of that log.

Yes.

Nice find from the archives. Stan wishing for moar magics numbers (practical ones, ofc ofc) is quite off-putting indeed.

Comment by Robinson Dorion — 2024-03-18 @ 21:21

To my mind it goes deeper than just "magic numbers", in the sense of sloppy/lazy/fragile coding, but to a fundamental difference of approach, which is what I was getting at with the "honest nodes" thing. Do we want something that *is* Bitcoin, or something that *looks like* Bitcoin as long as you don't look at it from outside the safe and controlled lab conditions?

Comment by Jacob Welsh — 2024-03-19 @ 04:21

Great write up and an interesting find. I also await the deep dive.

I must say though that this whole ordeal made me think of points two and three from the six worst ideas in computer security.

Is addressing issues as they occur really the right way to go about understanding/maintaining the bitcoind codebase? It seems that this article is a (successful!) swing of the hammer in a game of whack-a-mole.

I remember a while ago there was discussion about writing 'trbi'. This was to be a version of bitcoind done the right way, although iirc it also included changes to the protocol. Maybe writing a bitcoind that is compatible with the current chain in order to more intimately understand all the problems satoshi had to work through and then afterwards mapping/annotating all of his code is a better way to get bitcoind closer to the ideal than addressing problems as they occur.

Comment by whaack — 2024-03-19 @ 05:12

The better way to go about it would be *proactively* - spending time on it regularly, designing tests, cutting out the cruft before it becomes strictly required for fixing things etc. And to some extent we're doing that already, e.g. here I suppose anyone else in the industry would simply have said "restore it all from your last backup and leave me alone" rather than following the stink to discover a deeper problem and resolving it. To my mind we're maintaining the code because we need it for ourselves and for our clients, but we haven't as yet found a way for bitcoin nodes as such to generate enough cash flow to get more than the minimum attention, much as I would like to.

I don't see a connection here to the goose chase / rat race of enumerating badness. The amount of badness in the code, while perhaps large, is bounded (and note that I've already cut out large swaths in single strokes such as boost libraries).

As far as a rewrite from scratch, it's a seductive idea but uhm... have you ever tried reimplementing a complex protocol with no spec, a messy reference and an absolute requirement for full compatibility ? I think you overestimate how green is the grass on that side. From my own experience, I couldn't even get a rewrite of my own Scheme interpreter to full compatibility much less acceptable performance after about a month of luxurious undistracted hacking. The core tends to be fun and easy but the periphery is large (the long tail of the details).

Most of the problems Satoshi had to work through were of his own creation. The sadness is that it's an ugly, kludgey prototype for a jumble of half-baked ideas that got stuck promoted to the role of production implementation of the one great idea. But that's the reality we have to work with.

Comment by Jacob Welsh — 2024-03-19 @ 17:54

Good question, being more proactive with the work and bringing on more people to help with it is a priority. First step is I need to publish the higher level road map with concrete steps to get there. Being more deliberate with the work can also raise the odds of improving the cashflow to support the work.

As far as the fabled trbi, perhaps that's the holy grail, but how does one get there ? From our perspective, the manageable way to go about it is to clean up the Satoshi codebase as much as possible such that the protocol can be understood well enough to be specified.

Comment by Robinson Dorion — 2024-03-19 @ 19:34

There's a distributed protocol in deployment today where you could bring argument to Stan personally, supposing you have the temerity.

Comment by Michael Trinque — 2024-03-20 @ 17:32

[...] we left off, a stuck bitcoind instance had provided a fortuitous test case of the code's behavior under a deep [...]

Pingback by Auditing bitcoind for concurrent database objects: the call graph from hell « Fixpoint — 2024-03-20 @ 20:49

@Michael Trinque: or there's a public forum right here where he could bring argument to me, supposing he has same "temerity". What of it?

Each of us decided years ago not to work with the other, and for significant reasons it would appear. I have no desire nor need to "reprogram his head" nor convince him of anything and so I leave him alone.

To the extent I quote him now, it is for my own needs to speak my truth, to clarify and differentiate my approach where past association could otherwise be misconstrued.

Comment by Jacob Welsh — 2024-03-20 @ 21:07

@Michael Trinque

To quote the prior exchange Jacob mentions :

I supposed it's not a surprise, but par for the course he incorrectly inferred personal attack where there wasn't one. I read Jacob to be saying prb is doing the spamming on the codebase, which pretty much all would agree with. If you take the time to actually read the link on denial of service attack and the article it's from in comment #2, you may learn for the first time your nodes remain vulnerable to the DOS attack you think was patched years ago, a patch which was never included in the official 'tbf' tree anyways.

Perhaps a better use of ya'll's time than assuming we're scared of talking and ought "nut up" would be to get off your high horse and start looking into the documented vulns your nodes

runlimp with and/or lower hanging fruit like fixing all those oh so many broken links on your "how to build".P.S. What's one reason Jacob or anyone else should go to Stan wrt Bitcoin maintenance when there's a direct quote from #therealbitcoin above wrt his expressed and later signed move to "cement" betrayal of the Bitcoin protocol in his tree and ignore any chain that comes along with a deeper fork than the magic number he coads or allows users to configure ? How is that betrayal of the protocol substantially different than USGavin's ?

Because he's your supposed friend whereas Gavin's your enemy ?

Comment by Robinson Dorion — 2024-03-20 @ 23:03

Since evidently I imported a little too much context to be comprehensible, I'll clarify:

It's not even just the power rangers who deny the possibility of deep reorgs / fork unwindings, since there's the once supposed alternative playing the part of controlled opposition by doing the exact same thing. So no, I don't call TRB/TBF people and Stan in particular the code spammers, but I do call them the DoSers, sitting all those years in the seat of power, privilege and publicity granted by MP's say-so and doing jack shit with it: not just by the broader goal of community development and outreach but even by their own narrower self-professed goal of codebase cleanup. Or telling whoever came by with difficulties building or running the code that it was their own problem until proved otherwise by extensive effort (and not just regarding Bitcoin or with newbies). Or as already linked (nao with moar context), misrepresenting a vulnerability as having been closed.

But I can quite forgive the reader for confusing who I was putting in which category of attack, that being the larger point after all - who cares, they're not worth distinguishing outside of entomological interest.

So no, I have no further use for Stan's opinion on anything; or how did it go? "I do not bite my thumb at you, Sir. But I do bite my thumb, Sir."

Comment by Jacob Welsh — 2024-03-21 @ 00:03

[...] diagnosing and measuring the problem, considering options, and mapping the minefield, all that remains is to Make It So. Given the state of the codebase [...]

Pingback by Dropping BDB locking, bitcoind finally follows the Bitcoin protocol « Fixpoint — 2024-03-22 @ 05:30