Check kiting is a well-known type of attack on a banking system that exploits the time difference between two nodes' views of events, allowing value to be conjured out of thin air and, absent some kind of intervention, maintained indefinitely by writing checks back and forth, much like one might keep a kite aloft by running in circles.

By doubtless imperfect analogy, I'm using "DNS kiting" to refer to a situation I've just observed in the field in Domain Name System operations, where a server that was at one time the legitimate authority for a zone can maintain the appearance of authority indefinitely, in the view of sufficiently active caches, by refreshing the cached records of its own delegation, notwithstanding that the delegation was meanwhile revoked by the higher authority. It's perhaps a subtle variant of the more general phenomenon of cache poisoning.

The cache in question is my local instance of dnscache from djbdns-1.05.(i) It's an implementation that prides itself on its security record and clean, correct approach dating from a time when the more popular competition was busy piling broken hacks on top of broken foundations. To quote the documentation:

> dnscache does not cache (or pass along) records outside the server's bailiwick; those records could be poisoned. Records for foo.dom, for example, are accepted only from the root servers, the dom servers, and the foo.dom servers.

The question left unresolved here, to my mind, is: how do we know for sure what those foo.dom servers are? Logically, it cannot be "according to themselves" but rather "according to the root servers or the dom servers". How could it possibly be "in-bailiwick" for a server to vouch for its own authority? Other than at the top, that is, where the buck stops as it must, although the dnscache operator does enjoy the freedom to point it at whatever king he sees fit to recognize.
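
In code terms, the bailiwick rule quoted above amounts to a suffix match on label boundaries. The Python sketch below is only an illustration (dnscache itself is written in C, and the helper names here are hypothetical). It shows that the test says nothing about which servers are the real foo.dom servers: an NS record owned by rh3torica.com, served by servers the cache already believes are authoritative for rh3torica.com, passes.

```python
def labels(name: str) -> list[str]:
    """Lowercased labels of a domain name; a trailing root dot is ignored."""
    return [label for label in name.lower().rstrip(".").split(".") if label]


def in_bailiwick(owner: str, zone: str) -> bool:
    """True if `owner` equals `zone` or sits beneath it (hypothetical helper).

    The comparison is label by label, so "xfoo.dom" is NOT inside "foo.dom".
    The root zone is written as "".
    """
    o, z = labels(owner), labels(zone)
    return len(o) >= len(z) and o[len(o) - len(z):] == z


# Records for foo.dom are acceptable from the root, dom and foo.dom servers...
for zone in ("", "dom", "foo.dom"):
    print(f"{zone or '.':8} -> {in_bailiwick('foo.dom', zone)}")

# ...but the check cannot tell current servers from revoked ones: the old
# rh3torica.com servers asserting an NS set for rh3torica.com still pass.
print(in_bailiwick("rh3torica.com", "rh3torica.com"))
```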

And yet, short of digging into the code, this seems to be exactly the behavior I'm seeing. The sequence of events that aligned to make it possible was:

- The domain (rh3torica.com) initially used its registrar's courtesy nameservers (NameSilo; ns1, ns2 and ns3.dnsowl.com), so they were configured to answer queries for it;

- We then changed it to use a custom nameserver (run by me and, as it happens, running the "tinydns" server also from the djbdns package);

- I implemented a monitoring system to watch for changes on this among other domains, with the effect that my local dnscache is kept more or less continuously refreshed on it;

- The domain expired, at which point its NS records were presumably updated to point back to the registrar's own servers;

- The owner promptly renewed the domain, NS records were transparently restored and everything seemed fine;

- Some days later when I checked the monitoring system, I saw the domain had changed and was showing incorrect (outdated) data, sourced from the registrar's nameserver rather than the currently delegated one.

The current view from my desk is that the Top-Level Domain nameservers return the correct response:

    $ dnsq ns rh3torica.com a.gtld-servers.com
    2 rh3torica.com:
    98 bytes, 1+0+2+2 records, response, noerror
    query: 2 rh3torica.com
    authority: rh3torica.com 172800 NS a.ns.rh3torica.com
    authority: rh3torica.com 172800 NS b.ns.rh3torica.com
    additional: a.ns.rh3torica.com 172800 A 198.199.79.106
    additional: b.ns.rh3torica.com 172800 A 198.199.79.106

The local cache is seen still returning an incorrect delegation, with a high remaining TTL (time-to-live) close to the original two days:

    $ dnsqr ns rh3torica.com
    2 rh3torica.com:
    92 bytes, 1+3+0+0 records, response, noerror
    query: 2 rh3torica.com
    answer: rh3torica.com 167471 NS ns1.dnsowl.com
    answer: rh3torica.com 167471 NS ns2.dnsowl.com
    answer: rh3torica.com 167471 NS ns3.dnsowl.com
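
Assuming the cache stored this entry with the full 172800-second TTL that the dnsowl servers hand out, the remaining TTL dates the last time it was (re)cached:

$$172800\ \text{s} - 167471\ \text{s} = 5329\ \text{s} \approx 89\ \text{min}$$

That is, the stale delegation was presumably written into the cache about an hour and a half before this query, not back when the domain lapsed.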

My expectation was that the cache would agree with the TLD response, since more than enough time had passed for the cached records to expire. The presumed cause of the divergence is that those "dnsowl" servers, while no longer in the live chain of authority, are still serving up the bad data pointing to themselves:

    $ dnsq ns rh3torica.com ns1.dnsowl.com
    2 rh3torica.com:
    188 bytes, 1+3+0+6 records, response, authoritative, noerror
    query: 2 rh3torica.com
    answer: rh3torica.com 172800 NS ns1.dnsowl.com
    answer: rh3torica.com 172800 NS ns2.dnsowl.com
    answer: rh3torica.com 172800 NS ns3.dnsowl.com
    additional: ns1.dnsowl.com 7207 A 162.159.26.136
    additional: ns1.dnsowl.com 7207 A 162.159.27.173
    additional: ns2.dnsowl.com 21600 A 162.159.26.49
    additional: ns2.dnsowl.com 32400 A 162.159.27.130
    additional: ns3.dnsowl.com 21600 A 162.159.26.234
    additional: ns3.dnsowl.com 43200 A 162.159.27.98
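
The suspected mechanism can be reproduced in a toy model. The Python sketch below is not dnscache's actual logic; it assumes a hypothetical resolver that, while a cached delegation is still live, sends queries to the cached servers and (when refresh_from_child is set) accepts the NS set they return about themselves, restarting the TTL. Polled hourly, the stale dnsowl delegation never expires; without the child-side refresh it ages out after two days and the parent's delegation takes over.

```python
from dataclasses import dataclass

TTL = 172800  # seconds; the NS TTL both the com servers and dnsowl hand out above


@dataclass
class CachedNS:
    servers: tuple[str, ...]
    expires: int  # absolute time, in seconds, at which the entry goes stale


def lookup(cache: dict, now: int, parent_ns: tuple[str, ...],
           refresh_from_child: bool) -> tuple[str, ...]:
    """Resolve the NS set for rh3torica.com in a toy cache (hypothetical model)."""
    entry = cache.get("rh3torica.com")
    if entry is not None and now < entry.expires:
        # Delegation still live: ask the cached servers. They answer with
        # their own NS set, which passes the bailiwick check.
        if refresh_from_child:
            entry.expires = now + TTL  # the "kite" stays aloft
        return entry.servers
    # Expired (or absent): go back to the parent zone's servers.
    cache["rh3torica.com"] = CachedNS(parent_ns, now + TTL)
    return parent_ns


def final_answer(refresh_from_child: bool, poll: int = 3600, days: int = 30):
    """Poll every `poll` seconds for `days` days; return the last NS set seen."""
    parent = ("a.ns.rh3torica.com", "b.ns.rh3torica.com")
    stale = ("ns1.dnsowl.com", "ns2.dnsowl.com", "ns3.dnsowl.com")
    cache = {"rh3torica.com": CachedNS(stale, TTL)}  # cached at t=0, during the lapse
    answer = stale
    for now in range(0, days * 86400, poll):
        answer = lookup(cache, now, parent, refresh_from_child)
    return answer


print(final_answer(refresh_from_child=True))
print(final_answer(refresh_from_child=False))
```

Polling less often than once per TTL (for instance `final_answer(True, poll=TTL + 1)`) lets the stale entry lapse even in the refreshing model, in line with the point that only sufficiently active caches keep the kite flying.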

Running dnstrace turns up nothing untoward to my eye; in particular, the correct nameservers are found and the string "dnsowl" doesn't appear anywhere in the output.

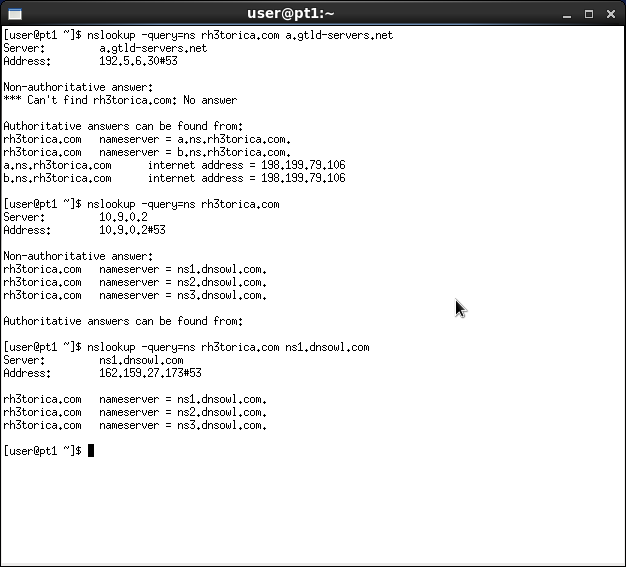

I had the domain owner try to fix the supposedly inactive zone records at the registrar, but everything showed correctly in their control panel: while the old registrar-hosted zone data was still available for viewing, it did not list any NS records, which are apparently managed automatically elsewhere. He also contacted customer support, who predictably insisted that it "works for them", blaming it on my cache and evading the point that their own servers are still publishing an incorrect delegation. With a little charity, I can see both sides: it sure does look like a real problem with the cache logic; but then what am I supposed to do, fix all the code around the Internet, when it could far more readily be addressed by them desisting from publishing bad data? Anyway, out of this effort come the same results seen above, for the screenshots-and-BIND-or-it-didn't-happen crowd:

Finally, the relevant lines from my dnscache log, as far as I can tell:

    2023-08-08 13:40:40.076788500 nxdomain a29f1b62 2560 rh3torica.com.
    ...
    2023-08-08 15:43:44.584879500 rr a29f1b82 172800 ns rh3torica.com. ns1.dnsowl.com.

The djbdns log output is brutally cryptic, but to help a little: a29f1b82 is the hex-encoded IPv4 address of the server that returned the logged result, which comes to:

    $ printf %d. 0xa2 0x9f 0x1b 0x82
    162.159.27.130.

That is ns2.dnsowl.com, as seen above. To seal the deal, here's the full log for a recent query, well over two days past the domain outage and renewal shown above. My read is that the once-valid authority keeps feeding us a delegation (NS) record that agrees with our previously cached one, thereby refreshing its TTL so that it will never expire as long as we keep checking.
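
The printf one-liner used above to decode the server field generalizes to a small decoder for the log lines quoted earlier. This Python sketch assumes the line layout shown in that excerpt (a two-field timestamp, then rr, server, TTL, type, owner and data); it is not a general dnscache log parser, and the function names are hypothetical.

```python
import ipaddress


def decode_server(field: str) -> str:
    """dnscache logs the answering server's IPv4 address as 8 hex digits."""
    return str(ipaddress.IPv4Address(bytes.fromhex(field)))


def parse_rr(line: str) -> dict:
    """Split an 'rr' log line of the form shown in the excerpt above.

    Assumed layout: DATE TIME rr SERVER TTL TYPE OWNER DATA
    """
    day, clock, kind, server, ttl, rtype, owner, data = line.split(maxsplit=7)
    if kind != "rr":
        raise ValueError(f"not an rr line: {kind!r}")
    return {"when": f"{day} {clock}", "server": decode_server(server),
            "ttl": int(ttl), "type": rtype, "owner": owner, "data": data}


line = ("2023-08-08 15:43:44.584879500 rr a29f1b82 172800 ns "
        "rh3torica.com. ns1.dnsowl.com.")
print(parse_rr(line))
print(decode_server("a29f1b62"))  # server field of the nxdomain line
```

Applied to the nxdomain line, the same decoding gives 162.159.27.98, which the dnsq output above lists as one of ns3.dnsowl.com's addresses, so that answer also came from the registrar's servers.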

Anyone with knowledge of either the DNS protocol or the djbdns implementation is warmly invited to write in to the comments below and tell me how I've got it all horribly wrong. Preferably before I just go ahead and flush the cache and tag the thing "not so reliable as previously believed," because I have about zero desire to become even more of a DNS wizard than I've already had to.

(i) Unmodified except for the addition of -include /usr/include/errno.h to the compiler flags, for compatibility with its musl-libc/Linux host system. I probably updated the root servers list at /etc/dnsroots.global too.

While cooking up a gport for djbdns and looking into the available patches in general, I find I'm not in fact the first to discover this (or something quite like it), and there's a fix. It looks like it was a more general blunder affecting multiple DNS implementations, which came to light only in 2012, quite late in that game.

Otherwise...

There's also an acknowledged security bug, albeit for uncommon scenarios, which Bernstein himself patched as late as 2009, awarding his bounty to the reporter, Matthew Dempsky.

Jonathan de Boyne Pollard has published a number of patches; I wasn't too impressed with his descriptions of the supposed problems but it might be good to have on hand at least.

This "PJP" guy, who tried very hard to make it look less like a djb program and shoehorn it into the Fedora/GitHub world, has collected some patch refs.

Then there's this nameless patch collection.

I expect the first two patches mentioned will go in, and I'm looking into the compiler warnings myself for potentially undiscovered portability issues.

Relatedly, the qmail gport has also received some needed attention; it was in Gales from the start but never used/tested there.

Comment by Jacob Welsh — 2023-10-01 @ 01:52

By and by we find some rationale from a different program in the suite,

In other words, ye olde "we made it go faster by not doing the work."

Indeed, this led me to rediscover a bug affecting 64-bit big-endian systems. Otherwise, there was gcc's builtin "puts" to defuse and some signed vs. unsigned int sloppiness that could conceivably be a problem at the 2G boundary, which seems unlikely to be even remotely approached given the context, but who knows.

These and all other interesting fixes I found are now in the port.

Comment by Jacob Welsh — 2023-10-30 @ 01:10